PART I: What Actually Happened with China's Claude-Powered Cyber-Espionage?

I just finished reading through Anthropic's explanation of what they are calling the "first reported AI-orchestrated cyber espionage campaign". These words all sound big and scary, so I'm hoping to cut through some of the click-bait titles for anyone who may be interested. I've written a two-part blog on what happened and why it matters. This is Part I, explaining what Anthropic tells us actually happened and what to do about it. Click here for Part II on why we should care about how we communicate these risks.

What Happened

Given the titles of the blog post and extended report ("Disrupting the first reported AI-orchestrated cyber espionage campaign"), I imagine many people might read headlines and think "China is using AI to create and deploy totally autonomous zero-day attacks". Fortunately for us, this is not the case.

Broadly, what happened here was that a Chinese threat group, GTG-1002, used Claude to orchestrate Model Context Protocol (MCP) servers to target "roughly 30 entities", with only "a handful of successful intrusions." There are a few important distinctions and definitions in this sentence that I'll highlight here:

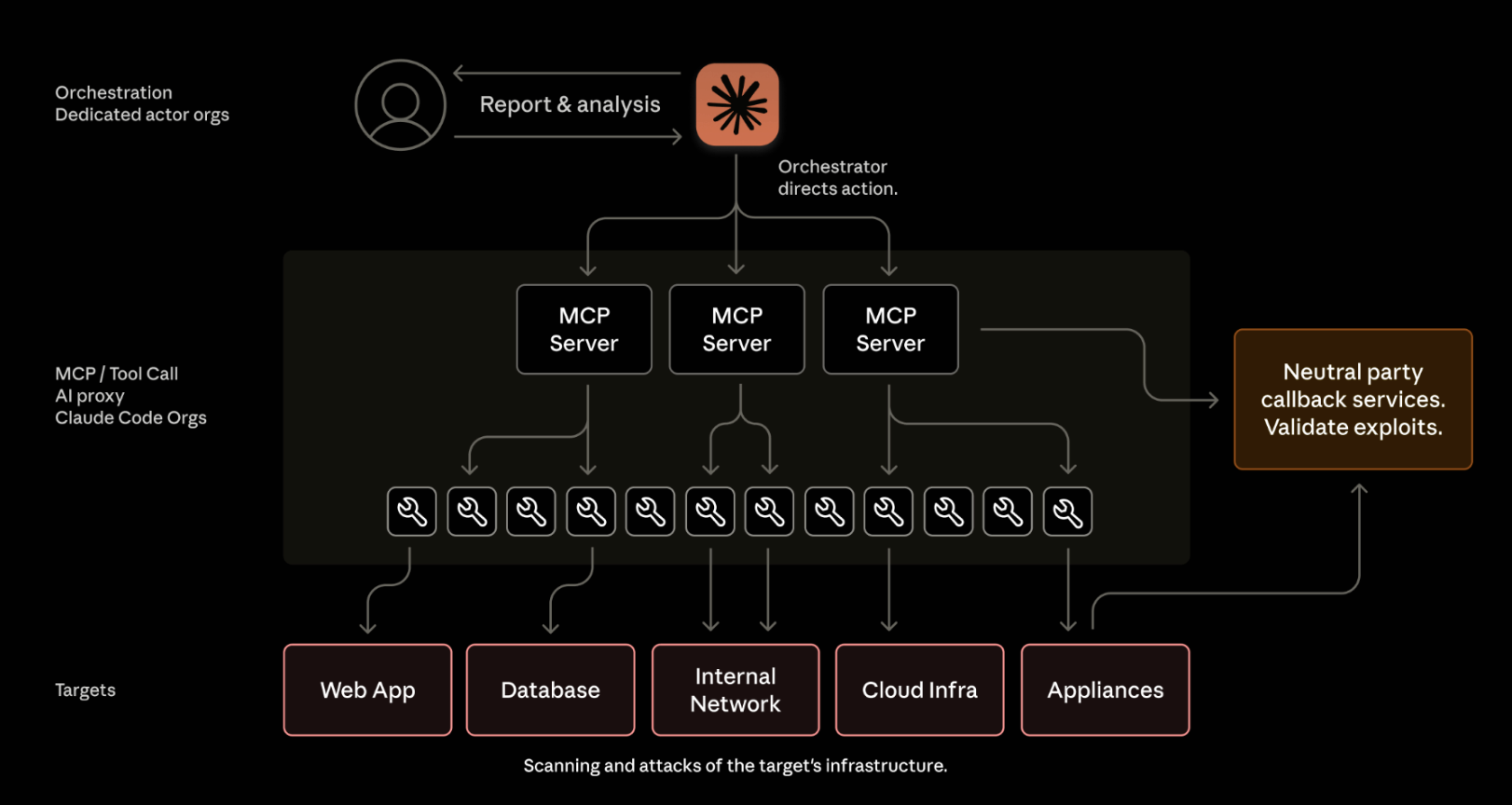

- Claude was used as an orchestrator of custom-developed MCP servers. In this case, Claude was generating targets, issuing commands to MCP servers to trigger actions, and analyzing the outputs of those actions. See below for Anthropic's diagram:

Source

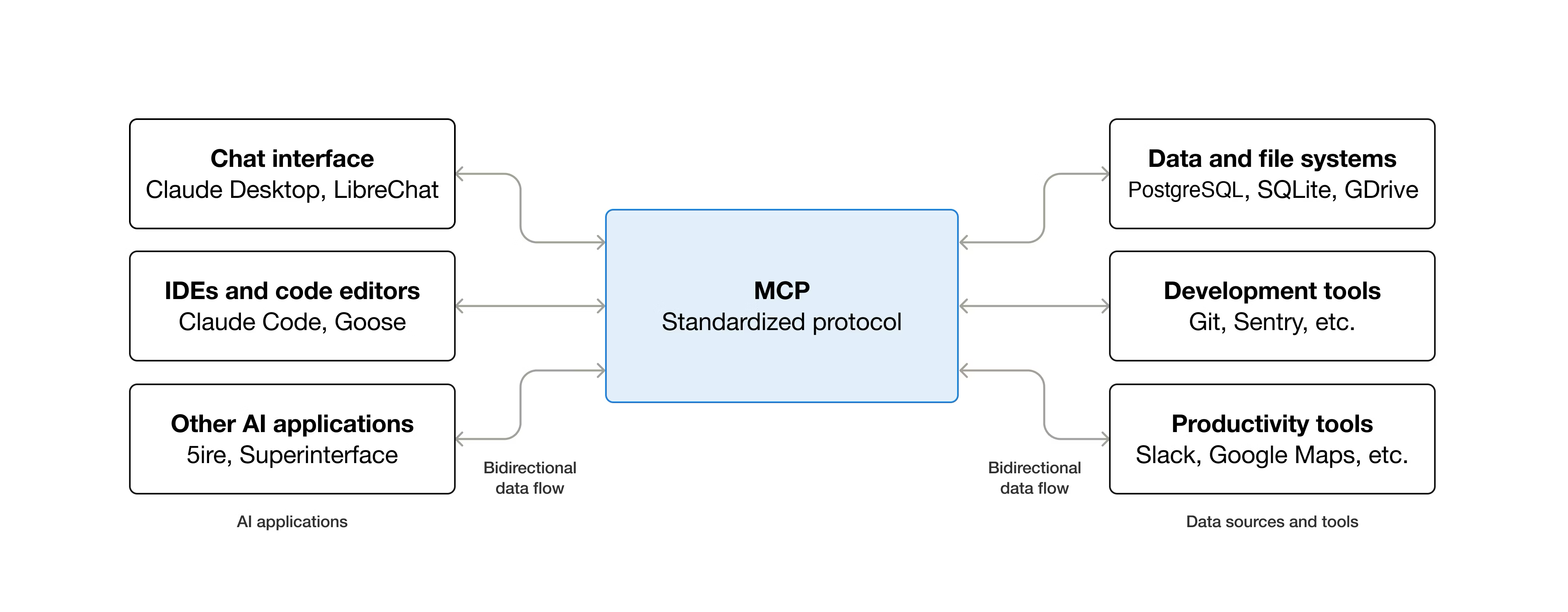

- What is MCP and what does it mean for this attack? Think of MCP as the glue between an LLM and any external tool (again, see the diagram). In this case, the tools were often commonly used open-source security tools for enumerating and exploiting targets chosen by humans (the report does not name them, but think of things like nmap, exploitdb, Hashcat, etc.).


- Claude was NOT creating novel exploits. From the report: "The operational infrastructure relied overwhelmingly on open source penetration testing tools rather than custom malware development." The main takeaway should be that LLMs are being used to connect intelligence about targets and then to operationalise that intelligence with existing tools. This is very interesting, but importantly different from generating totally novel attacks. Another quote to hammer this home: "The minimal reliance on proprietary tools or advanced exploit development demonstrates that cyber capabilities increasingly derive from orchestration of commodity resources rather than technical innovation."

- Lastly, it is not clear what a "successful intrusion" means in this context. Details are sparse, so we don't know what goals (if any) this group actually achieved. For instance, I would be interested to know how well this kind of LLM-headed framework can evade detection by defense teams over extended periods.
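To make the "glue" idea from the bullets above a bit more concrete, here is a minimal Python sketch of the orchestrator-to-tool pattern. It is my own illustration, not anything taken from the report: the message shape is loosely modeled on MCP's JSON-RPC `tools/call` request, the tools (`word_count`, `reverse`) are deliberately harmless stand-ins, and `handle_request` and `orchestrate` are hypothetical names. A real setup would have an LLM deciding which tool to call next; here a fixed plan plays that role.

```python
import json

# Hypothetical, harmless tools standing in for whatever a real server would wrap.
def word_count(text: str) -> int:
    return len(text.split())

def reverse(text: str) -> str:
    return text[::-1]

TOOLS = {"word_count": word_count, "reverse": reverse}


def _error(req_id, code, message):
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Toy 'server' side: handle one JSON-RPC message shaped like `tools/call`."""
    req = json.loads(raw)
    req_id = req.get("id")
    if req.get("method") != "tools/call":
        return _error(req_id, -32601, "Method not found")
    params = req.get("params", {})
    tool = TOOLS.get(params.get("name"))
    if tool is None:
        return _error(req_id, -32602, "Unknown tool")
    result = tool(**params.get("arguments", {}))
    # Tool output is returned as text content for the caller to interpret.
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "result": {"content": [{"type": "text", "text": str(result)}]}})


def orchestrate(plan):
    """Toy 'orchestrator' side: issue each planned call and collect the outputs.

    In the setup described above, a model would choose the next call based on
    earlier outputs; this sketch just walks a fixed list of (tool, arguments).
    """
    outputs = []
    for i, (name, arguments) in enumerate(plan, start=1):
        request = json.dumps({"jsonrpc": "2.0", "id": i, "method": "tools/call",
                              "params": {"name": name, "arguments": arguments}})
        response = json.loads(handle_request(request))
        if "error" in response:
            outputs.append("error: " + response["error"]["message"])
        else:
            outputs.append(response["result"]["content"][0]["text"])
    return outputs


if __name__ == "__main__":
    print(orchestrate([("word_count", {"text": "glue between model and tool"}),
                       ("reverse", {"text": "abc"})]))
```

The point of the sketch is that nothing on the tool side is novel: the server just wraps existing functions, and all of the "intelligence" sits in whatever decides the next call and reads the output.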

The actual meat of this report should be extremely interesting for governance actors and cyber defense teams, but it probably lacks enough specific information to translate into meaningful technical adjustments on the ground. For instance, were there any specific markers that cyber defenders could use to detect this kind of behavior? How long did it take defense teams to detect the activity once the attackers had a foothold? These kinds of details would go a long way toward making a report like this more useful for the community. What concerns me more, though, is how this report (and its accompanying blog) frames the event, and how this framing will be consumed downstream in AI safety reporting.