[!NOTE] Hopefully this piece will be the first in a series of posts outlining what I'm thinking about during my MSc dissertation. I'm envisioning this to be more like a sandbox than an academic work, so citations will be rough and ideas not fully formed. I'll try to give some background in this post about how I've landed near my research question, but expect future posts to be a bit more to the point.

As always, if you want to chat more about anything that sits in here, please find me in any way you see fit.

First: Sorting out a research question

When chatting with my dissertation advisor, Jack Stilgoe, he talked about how difficult it can be to nail down a coherent (and stable) research question. The advice which stuck was to tune in to the initial tension that caught your attention, and let that guide your inquiry. What bugged me about AI safety so much that I decided to move to London to study this? Well, the question that has been nagging at me all this time is more or less this: Are safety assessments actually making AI models safer?

This question has plenty baked into it. How do we define safety? Who is the "we" that defines it? What metrics can we use to measure safety? What is safe enough? What isn't? Is it possible to make these decisions with our current suite of assessments? These questions could drone on, but you get the idea. There is a lot of ground to cover.

All these words arrive on the back end of an MSc degree in Science, Technology and Society in the department of Science and Technology Studies (STS) at University College London (UCL)^. I've been working for a few months now at the UCL Centre for Responsible Innovation (CRI) trying to wrap my head around what innovating responsibly actually means, and how those practices actually make it into the world. I am still wrapping my head around it, and don't think that will change any time soon.

At the CRI, we've been talking about themes in Responsible Innovation at UCL, and one that has stuck out for this project is "Looking Upstream". Essentially it means engaging with technology not at its endpoint, but where the interesting decisions are made. In the case of my research, that means people typing on keyboards at big tech companies like OpenAI, government institutes like the UK AI Security Institute (AISI), assessors like Holistic AI, and elsewhere. So, how does one look upstream AND start to answer the question of whether AI safety assessments are making us safer? For one, shrinking the question helps. What also helps are advisors and other kind souls who are willing to let me talk about this at length. The combination of these two things and my background in cybersecurity has been landing somewhere in the ballpark of this more manageable question: Are tools for AI red teaming designed for social good?

This question still has plenty to unpack, but let's start like any good STS MSc, with some time exploring a crucial definition.

Dissertation Log #1: Definitions and History of AI Safety

There are a few things worth defining in order to answer my semi-refined research question - AI, safety, harm, social good, red teaming, etc. I'll start with the obvious STS preface here...who gets to set any of these definitions matters. This is clearer for some things than others. A governance actor that defines safety thresholds for access to public markets has a lot of power for obvious reasons. Definitions will inherently include values and visions of the future, which offer rich ground for exploration. I'll leave most of these definitions for the big paper, but for now I'll start with an obvious one: AI Safety.

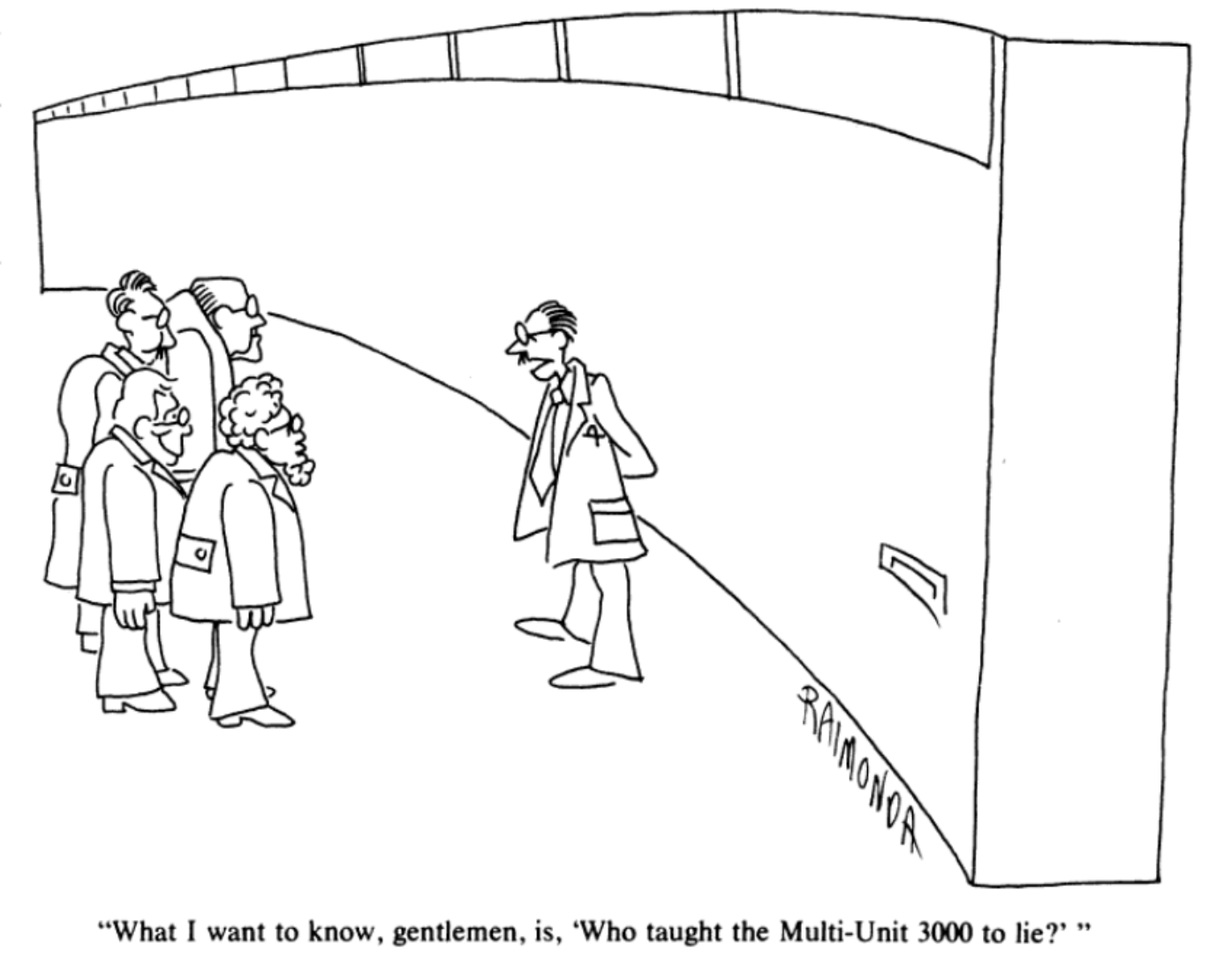

It could be because of my studies/background/location, but it feels like "AI Safety" is inescapable as a research topic right now, even though it can be hard to sort out what it means exactly. What is slightly more interesting is that, though it may not feel like it, it isn't a particularly new concept. This paper from Joseph Weizenbaum is from 1978, and includes some laughable predictions about the future (Jack Stilgoe points out this one: "Will the home computer be as pervasive as today's television sets? The answer is almost certainly no"). However, it also reads at times like any one of the op-eds on AI safety you may find in your newsfeed. I mean, look at this comic:

You cannot tell me that if you replaced "the Multi-Unit 3000" with the name of your preferred AI system that this would not play in the NYT this week. In his paper, Weizenbaum hits a lot of talking points that are more sociotechnical in nature - situating AI (and computing) in different contexts, considering the power it holds (and grants), and engaging with promises of AI against the uncertainty of its capabilities. It's a definition of safety that focuses on presently observable social harms. In a more canonical "AI Safety" moment, Alan Turing famously lamented that intelligent computers would someday "outstrip our feeble powers" and pointed towards a future which could mirror the dystopian Samuel Butler novel Erewhon from 1872. Erewhon hits on themes of machine consciousness and self-replication/improvement even in the 19th century. Butler and Turing imply a different definition of safety - one that is more concerned with an existential threat to humanity. All this to say, concerns about existential and sociotechnical risk from AI have been here much longer than we might think.

Bringing us to the present, this paper outlines a budding tension between the AI Safety (Erewhon-leaning) and AI Ethics (sociotechnical-leaning) epistemic communities. The authors argue that AI Safety discourse is dominated by visions more similar to Erewhon than Weizenbaum, and that those visions come from a very specific community of thinkers. While there are clearly those who are concerned about the sociotechnical risks of AI that are present today, my intuition tends to agree with that premise. Organizations with names like "The Future of Life Institute", "The Centre for Long Term Resilience", and the "Center for AI Safety (CAIS)" are constantly in my newsfeeds with reports suggesting we need to seriously consider things like "alignment" to mitigate risk to future generations of humanity. Further, these organizations have raised hundreds of millions to research the AI Safety conception of risk, and have proliferated it far and wide.

This paper (written by some folks in the AI Safety community) does well to taxonomize "AI Safety" risks into four categories: Alignment, Monitoring, Robustness, and Systemic Safety. These categories have been helpful for me when trying to sort initiatives into the "Safety" or "Ethics" bucket. Papers like this also give a sense that the field of AI Safety is being built right now. One doesn't need to look far to see organizations touting "alignment" to human values, and how we might find novel ways to codify it in policy. In the context of my semi-stable research question (Are tools for AI red teaming designed for social good?), it points me towards better understanding how assessment tools attempt to define "human values", or unpacking some epistemic logics behind metrics and benchmarks established for safety. It also offers new questions: Is "AI Safety" a totally novel field? Are there historical conceptions of safety that might be helpful in our current investigations? What can these investigations tell us about whether we are making models "safer"?

This post is already much longer than intended, and it has mostly covered a topic I had no intention to discuss, so I'll wrap up here with some important thoughts to preface whatever comes next. Careful consideration must be given to which conceptions of safety we prioritize, and how they are leading to tangible safety programs and technology policy. I want to be clear that I am not saying that the concerns of the AI Safety community shouldn't be prioritized - I think they are important, and I take very seriously the fact that many AI researchers are concerned about emerging capabilities. But, relying on one conception over another might make it more difficult to develop AI systems that truly benefit all of humanity, a goal often seen floating around these technologies. Outlining the limits of our assessment capabilities and clearly communicating them to the public will go a long way towards making AI safety a democratic conversation and not one that exists only in elite research institutions.

Ideas for topics to come

- The 2010s AI Safety boom - where did that come from?

- A brief taxonomy of social harms from AI

- A history of red-teaming and other safety assessments

- An "AI Safety Assessment Field Report"

- The politics of benchmarks

- Snapshot of AI safety research at elite institutions (Does a certain conception of risk get the money?)

- Alignment, Monitoring, Robustness, Systemic Safety - AI safety research re-imagined as AI ethics

Things that are impacting my thinking right now (that I thought this post would be about)

| Title | Link |
|---|---|
| AI As Normal Technology | https://knightcolumbia.org/content/ai-as-normal-technology |
| Red-Teaming in the Public Interest | https://datasociety.net/library/red-teaming-in-the-public-interest/ |
| AI and the Everything in the Whole Wide World Benchmark | https://arxiv.org/abs/2111.15366 |
| Field Building and the Epistemic Culture of AI Safety | https://firstmonday.org/ojs/index.php/fm/article/view/13626/11596 |

Many things I've been looking at lately (in chaotic order):

^sorry for the onslaught of initialisms, they will continue relentlessly in these posts