[!NOTE] This post is a bit longer and more scattered. On reflection, it is about setting the stage for how different approaches to risk frame assessments. I'm still in the market for some interviewees, so if you or anyone you know is working in the AI safety assessment space, please do reach out!

Dissertation Log #2: Bed Nets, Nuclear Reactors and Technocratic Framing of Risk

This week I attended a department research seminar on how we frame the problem of Neglected Tropical Diseases (NTDs). I listened as folks discussed the "medicalization" of global health - a process by which human problems tend to turn into medical problems - and things started sounding familiar. Many of the sticky questions from my last post are present in NTDs in relation to global health: who defines global health? How do we measure it? Are NTDs the best way to measure health? Or just the easiest? Which discourse is dominating global health policy?

NTDs offer a starting point for me to tie together a few interesting threads from my intellectual history, AI safety, and a sticky problem of responsible innovation. A question I began pondering towards the end of my last post was whether AI Safety is presenting new problems when it comes to risk. It is often framed as such, but where else might we look for ideas on how to approach safety?

Metrics: EA and NTDs

One place to start is with global health movements surrounding NTDs. One group which has dominated philanthropic discourse has been the Effective Altruism (EA) movement. EAs are remarkably clear when it comes to their aims in global health - they want to give as efficiently as possible to do the most good. This means identifying cause areas that score highest on their 'Importance, Tractability and Neglectedness' (ITN) framework. I argue that all three of these terms, while sounding objective, contain values. Important to whom? Compared to what? They also require measurement. How does one measure tractability? At what cost is a cause tractable? By what metric can we consider a disease neglected?

EAs try to answer these questions by producing metrics which measure the things they care about. In the case of GiveWell, a central EA organization, this means measuring outcomes in terms of lives saved (or lives improved) per dollar spent. It can also mean thinking about things like forecasting probability or magnitude of impact. Once these metrics exist, they allow for the calculation of cost, likelihood and impact. This makes it possible to rank organizations and their impact "objectively". If organization X can save a life for $4,000, and organization Y for only $3,000, their decision is made for them by the numbers. In the case of malaria, EA sees an enormous opportunity to cheaply save lives by distributing bed nets. This paper rightly points out that EA even managed to re-define what "neglect" meant in the context of disease (malaria is not traditionally considered an NTD), highlighting the politics of some of their measurements.

Where this lines up with AI Safety is in a trick of longtermism - incorporating future generations into their calculations of cost and benefit. I'm sure somebody will clobber me for this description, but the basic idea is this: if EA cares about lives saved per dollar spent, then counting a nearly infinite number of future lives, even at low probabilities, makes the cost-benefit ratio quite compelling in x-risk scenarios. Numbers and metrics play a crucial role in the thinking here. Their views on both NTDs and AI Safety rely on our ability to measure, analyze, and rank risk objectively.
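To make that arithmetic concrete, here is a minimal sketch of the expected-value reasoning as I understand it. The $3,000-per-life figure is the organization Y example from above; every number in the x-risk case (programme cost, probability of success, future lives counted) is a placeholder I invented for illustration, not an estimate anyone has published.

```python
def cost_per_expected_life(cost_usd: float, probability: float, lives_at_stake: float) -> float:
    """Dollars spent per life saved in expectation (cost / (probability * lives))."""
    expected_lives = probability * lives_at_stake
    return cost_usd / expected_lives


# Near-term benchmark: organization Y from the example above, $3,000 for one life.
near_term = cost_per_expected_life(cost_usd=3_000, probability=1.0, lives_at_stake=1)

# Hypothetical x-risk programme. All three inputs are invented for illustration.
x_risk = cost_per_expected_life(
    cost_usd=1_000_000_000,  # assumed: a $1bn programme
    probability=1e-6,        # assumed: one-in-a-million chance it averts catastrophe
    lives_at_stake=1e15,     # assumed: number of future lives counted
)

print(f"near-term: ${near_term:,.2f} per expected life")
print(f"x-risk:    ${x_risk:,.2f} per expected life")
```

With these made-up inputs the x-risk programme comes out at roughly one dollar per expected life, far below the bed-net benchmark. Shift the assumed probability or the assumed number of future lives by a few orders of magnitude and the ranking flips, which is why the choice of inputs carries so much weight.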

A more quantified framing might suggest that numbers and metrics should be taken as objective and are thus apolitical in nature. This Clark paper, along with the rest of the articles in that issue, represents the other side of the NTD debate and presents compelling arguments against the kind of quantification seen in global health at present. This presents an opportunity to fall down an STS rabbit hole about measurements, metrics and objectivity, but I will resist rehashing an already battered argument. While there is a deep well of case studies in which quantification has fallen short of objectivity, I think the more interesting question is how it relates to patterns in approaching and addressing risk. My last post started to outline this phenomenon in AI, but likewise in global health, there exists a tension between two different epistemic communities: one that trusts in metrics/technology and one that argues for a bit more context. I'll take a detour through the history of nuclear safety to cover another instance of this tension, before making our way back to why this matters for assessing risk.

Risk Approaches: AI and Nuclear Safety

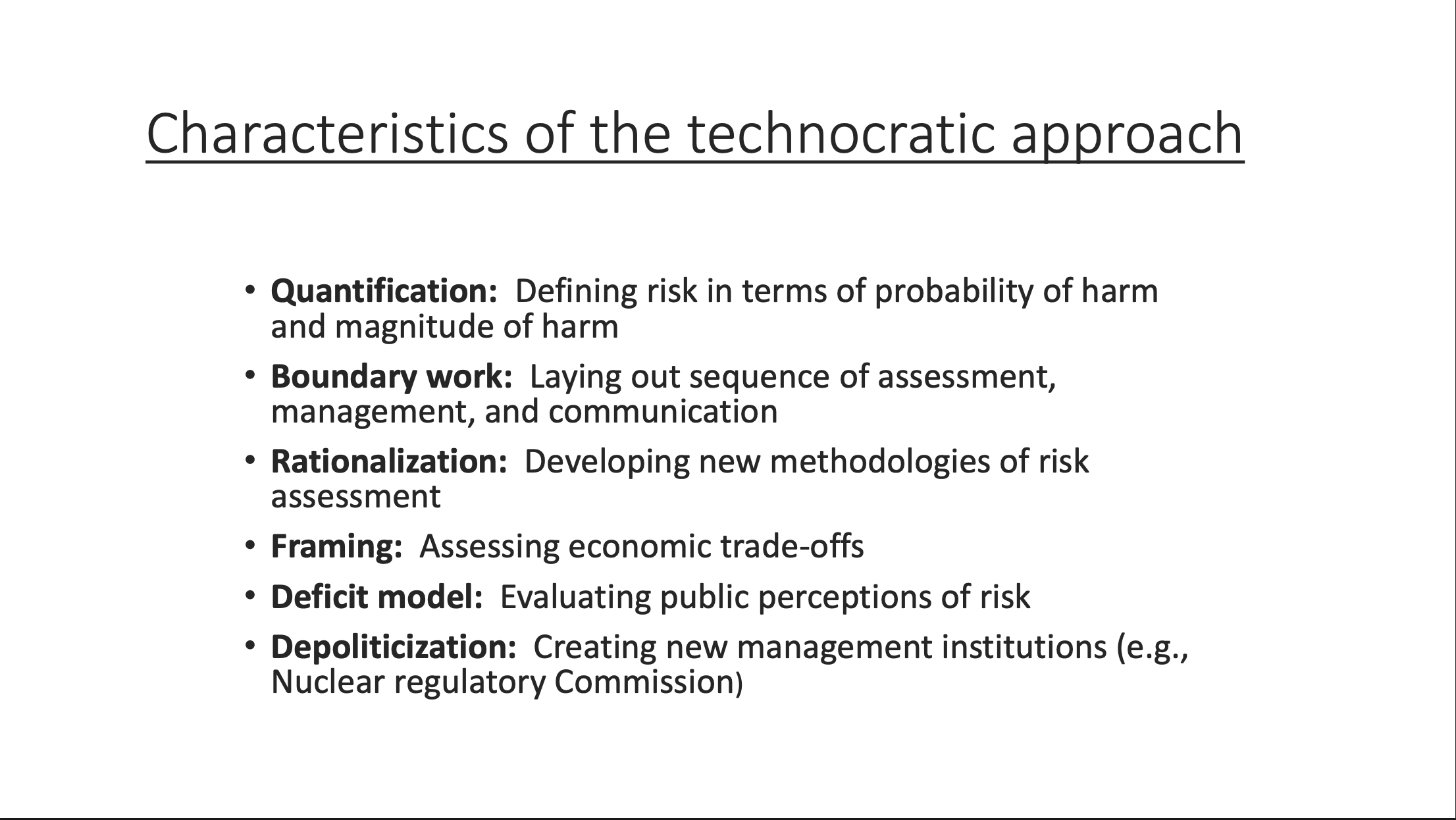

I started this post asking if AI safety is presenting particularly novel tensions when thinking about risk. As we've discussed above, NTDs present similar questions and solutions in our present moment. If we look a bit further into our past, we might also recognize similar patterns of definition, risk assessment, and hype with the emergence of nuclear capabilities. Sheila Jasanoff points out eerily similar dynamics in this talk, describing two approaches to nuclear risk: the technocratic, and the social-cultural. She highlights a longstanding tension in the fields of risk assessment and risk management. One slide sums up the technocratic approach quite well:

Looking at the above, it becomes easy to notice some similarities - probabilities and magnitudes, calls for new methodologies for risk assessments, framing things in cost-benefit. All these sound familiar when discussing AI or NTDs. Again, I'm not necessarily trying to convince anyone that the social-cultural approach is superior, but rather pointing out that the tensions in discussions of risk have followed similar patterns across technologies and over time.

Something I might add to the above slide would be how hype and uncertainty tend to factor into risk assessments. 80,000 Hours sent out a piece just yesterday (June 6th, 2025) on why AGI might still be decades away, which already has some assumptions built into the title: namely, that this is actually a conservative estimate for when AGI may arrive, and that in fact it will be much sooner. It struck me to compare it with this quote from John F. Clarke, deputy associate director for fusion at the US Department of Energy in 1980:

"We are confident we will have a package we can deliver [for nuclear fusion] in 10 years. I'm really excited about it"

Here we are, almost 50 years later, and similar promises are still being made about fusion. All this to say, when dealing with a new technology, especially one of transformational capacity, it is common to envision low-probability but high-consequence capabilities that are just around the corner.

In the case of AI Safety, this has meant not only envisioning novel capabilities but also projecting novel risks. For all this talk of novelty, it is easy to argue that the prediction of new risks is itself entirely predictable! So what might we do with the idea that, while these risks themselves may be generally novel, our experience of assessing risks presented by an emerging technology is not?

AI as a Normal Technology with Normal Accidents and Normal Risks

Steve Rayner calls the cycle of hype, skepticism, and reassurance the "novelty trap" in emerging technology. In his cycle, a new technology arrives with the promise of unbelievable benefits, is then flooded with claims of new risks, and is eventually downplayed to assure the public that it is really not too dangerous. In this cycle for AI, we are potentially entering the skepticism phase, which means next up should be the "we have been doing this for years" phase (people said that nuclear was just a "new way to boil water" here). I'm interested to see if the hype and egos around AI will allow that phase to arrive, but there are some useful ways (new and old) to think about AI as non-revolutionary.

This paper argues that we might consider AI as "normal" in the present and future. The authors are explicit that they aren't arguing against AI as a transformative technology, but rather that we need not frame AGI as inevitable (or even necessary) for it to cause harm. They argue that many factors (both technical and not) will slow AI's progress. They also point out that humans will likely remain in control of AI systems during their design and implementation by necessity. If we take this "normal" view of AI, we can consider risk to be systemic, giving us access to a rich history of risk assessment frameworks for reference.

A socio-cultural approach to risk might assert that governing emerging technologies is an inherently social process—an argument that has been made since the 80s. A book that has been shaping my thinking on this is Normal Accidents by Charles Perrow. His central claim is that many high-risk technologies—like nuclear power plants, space shuttles or recombinant DNA—have inherently complex and tightly-coupled characteristics which inevitably result in failure. This doesn't mean failures are common or predictable, but rather that a goal of zero failures may not be reasonable. If we abandon the idea that we can totally prevent accidents, we can also shift our approach to safety away from probabilistic calculations and towards a more qualitative analysis. This opens us up to questions of power and context. Instead of interrogating "How likely is harm?" we might ask "How complex, tightly coupled, and politically fraught is our system?"

This leaves us with some questions to explore further for AI safety. What assessments can deal with this kind of systemic complexity? Where are globalized assessments useful (and not)? How are people actually treating safety assessments in reality? Even if more technocratic assessment tools dominate, it does not mean that people aren't also considering context when using them. Understanding what tools are available and how these tools are used "in the wild" will offer new ground to explore.

Ideas for topics to come

- The 2010s AI Safety Boom - where did that come from? ✔

- A brief taxonomy of social harms from AI

- A history of Red-teaming and other safety assessments (up next?)

- AI Safety Assessment Field Report (up next?)

- The Politics of Benchmarks ✔

- Snapshot of AI Safety Research at Elite Institutions (Towards which conception of risk do research dollars flow?)

- Alignment, Monitoring, Robustness, Systemic safety - AI safety research re-imagined as AI ethics

- What do numbers do for us? Securitization and Simplification of Risk (up next?)

Things that especially informed this post:

| Title | Link | Author |
|---|---|---|
| Normal Accidents | https://press.princeton.edu/books/paperback/9780691004129/normal-accidents | Charles Perrow |
| Effective altruism, technoscience and the making of philanthropic value | https://www.tandfonline.com/doi/full/10.1080/03085147.2024.2439715 | Apolline Taillandier, Neil Stephens, Samantha Vanderslott |
| Bridging the Two Cultures of Risk Analysis | https://onlinelibrary.wiley.com/doi/10.1111/j.1539-6924.1993.tb01057.x | Sheila Jasanoff |

Everything from this week:

| Title | Link | Author |
|---|---|---|
| Medicalization of global health 1: has the global health agenda become too medicalized? | https://pmc.ncbi.nlm.nih.gov/articles/PMC4028930/ | Jocalyn Clark |
| Effective altruism, technoscience and the making of philanthropic value | https://www.tandfonline.com/doi/full/10.1080/03085147.2024.2439715 | Apolline Taillandier, Neil Stephens, Samantha Vanderslott |
| Safety Co-Option and Compromised National Security: The Self-Fulfilling Prophecy of Weakened AI Risk Thresholds | https://arxiv.org/abs/2504.15088v1 | Heidy Khlaaf, Sarah Myers West |
| Law and the Emerging Political Economy of Algorithmic Audits | https://osf.io/preprints/lawarchive/xvqz7_v1 | Terzis, Veale, and Gaumann |
| Nuclear Risk, STS, and the Democratic Imagination | https://www.nationalacademies.org/documents/embed/link/LF2255DA3DD1C41C0A42D3BEF0989ACAECE3053A6A9B/file/DFB8EB42BA0E8A451D6633DF247763AD40F1A099B670?noSaveAs=1 https://www.youtube.com/watch?v=fEfowN7e-Jg&ab_channel=TheNationalAcademiesofSciences%2CEngineering%2CandMedicine | Sheila Jasanoff |
| Bridging the Two Cultures of Risk Analysis | https://onlinelibrary.wiley.com/doi/10.1111/j.1539-6924.1993.tb01057.x | Sheila Jasanoff |
| Normal Accidents | https://press.princeton.edu/books/paperback/9780691004129/normal-accidents | Charles Perrow |
| Metrics: What Counts in Global Health | https://read-dukeupress-edu.libproxy.ucl.ac.uk/books/book/78/MetricsWhat-Counts-in-Global-Health | Vincanne Adams |
| Trust in Numbers: The Pursuit of Objectivity in Science and Public Life | https://press.princeton.edu/books/paperback/9780691208411/trust-in-numbers | Theodore Porter |
| AI As Normal Technology | https://knightcolumbia.org/content/ai-as-normal-technology | Narayanan and Kapoor |
| The Novelty Trap: Why Does Institutional Learning about New Technologies Seem So Difficult? | https://eric.ed.gov/?id=EJ682697 | Steve Rayner |